Running Facebook's Open/R in EVE-NG


Facebook announced its homegrown Open/R routing protocol a few years ago and eventually released it to the general public as open-source software. As a distributed network application, Open/R shares many fundamentals with traditional Dijkstra-based link-state routing protocols like IS-IS and OSPF. When it was announced, there was much skepticism across the industry about the need for yet another routing protocol. This article does not attempt to weigh the positives and negatives of running a new routing protocol. Instead, I want to show how easy it is to integrate Open/R into a traditional network.

Setting up the lab

Akshat Sharma from Cisco created a project that compiled Open/R onto a lightweight Ubuntu 16.04 VM running IOS-XR code. For this post, I will be using this VM as the base for working with Open/R in the EVE-NG hypervisor. You can acquire the VM by installing Vagrant on your host machine and using it to download the compiled Open/R VM. I am using a Mac, with Vagrant installed via Homebrew.

vagrant box add ciscoxr/openr-xr_ubuntu

After the VM is downloaded, I used the VMDK file in VirtualBox to make some adjustments to suit this demonstration a little better. One of the things I am changing in the base VM is enabling both IPv4 and IPv6 routing and installing (but not enabling) FRRouting so that it is available later. The first step is to log into the VM as vagrant / vagrant, then enter sudo -s to perform the next several actions as root.

sudo -s

# Enable IPv4 and IPv6 routing by editing /etc/sysctl.conf

# and uncommenting the following two lines:

net.ipv4.ip_forward = 1

net.ipv6.conf.all.forwarding = 1

# Apply the new settings:

sysctl -p /etc/sysctl.conf

# Add the FRRouting details so that you can install from apt:

wget https://apps3.cumulusnetworks.com/setup/cumulus-apps-deb.pubkey

apt-key add cumulus-apps-deb.pubkey

echo "deb [arch=amd64] https://apps3.cumulusnetworks.com/repos/deb $(lsb_release -cs) roh-3" >> /etc/apt/sources.list.d/cumulus-apps-deb-$(lsb_release -cs).list

apt-get update && apt-get install frr

# Enable serial console support so you can telnet to the console in EVE-NG:

systemctl enable serial-getty@ttyS0.service

systemctl start serial-getty@ttyS0.service

# Edit /lib/systemd/system/networking.service and change

# the timeout value at the end of the file to 5sec. This

# is necessary because the environment was built in

# VirtualBox but is going to be run under QEMU on EVE-NG.

# Without changing this setting, the VM will take 5 minutes

# to boot:

TimeoutStartSec=5sec

# Optionally change the base hostname from "rtr1":

hostname openr

echo "openr" > /etc/hostname

echo "127.0.0.1 openr" >> /etc/hosts

echo "127.0.1.1 openr" >> /etc/hosts

# Edit /etc/rc.local so that Open/R runs automatically

# in the background at boot, which also keeps the

# console free of the messages Open/R produces. Add the

# following line before exit 0:

nohup /usr/sbin/run_openr.sh > /dev/null 2>&1 &

# We will also make some tweaks to the shell script that

# initiates Open/R. Edit /usr/sbin/run_openr.sh

# Comment out the PREFIXES line to prevent these specific

# prefixes from being advertised by default. We will add

# this in manually later.

# Locate the IFACE_PREFIXES line and change it to "eth":

IFACE_PREFIXES="eth"
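If you build more than one image, the manual file edits above can be scripted. The following is a minimal sketch that assumes GNU sed (as shipped with Ubuntu) and assumes each setting appears at the start of a line in its file; the function names are hypothetical, and each function takes the file to edit as an argument so you can try it on a copy first:

```shell
# Hypothetical helpers that script the manual edits above (GNU sed assumed).

# Uncomment the IPv4 and IPv6 forwarding lines in a sysctl.conf-style file.
enable_forwarding() {
  sed -i -E 's/^#[[:space:]]*(net\.ipv4\.ip_forward|net\.ipv6\.conf\.all\.forwarding)([[:space:]]*=)/\1\2/' "$1"
}

# Set a systemd unit's start timeout (e.g. networking.service) to 5 seconds.
shorten_timeout() {
  sed -i -E 's/^TimeoutStartSec=.*/TimeoutStartSec=5sec/' "$1"
}

# In run_openr.sh: comment out PREFIXES and run Open/R on interfaces named eth*.
tweak_run_openr() {
  sed -i -E -e 's/^PREFIXES=/#PREFIXES=/' \
            -e 's/^IFACE_PREFIXES=.*/IFACE_PREFIXES="eth"/' "$1"
}

# Usage on the VM, as root:
#   enable_forwarding /etc/sysctl.conf && sysctl -p /etc/sysctl.conf
#   shorten_timeout /lib/systemd/system/networking.service
#   tweak_run_openr /usr/sbin/run_openr.sh
```

The anchored patterns (`^PREFIXES=` versus `^IFACE_PREFIXES=`) keep the two similarly named settings from being confused with each other.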

After you have made these changes (along with whatever other customizations you want), shut down the VirtualBox instance and either clone or export it so that you get a VMDK file with the changes consolidated (VirtualBox will use the base VMDK and keep changes in snapshot files by default). Then copy the new VMDK file to your EVE-NG instance. I use scp for this:

scp box-disk001.vmdk root@EVE-NG:/root

SSH to your EVE-NG instance, convert the VMDK to QCOW2 format for QEMU, and add it as a new Linux node type:

ssh root@EVE-NG

mkdir /opt/unetlab/addons/qemu/linux-openr/

qemu-img convert -f vmdk -O qcow2 box-disk001.vmdk /opt/unetlab/addons/qemu/linux-openr/hda.qcow2

rm box-disk001.vmdk

/opt/unetlab/wrappers/unl_wrapper -a fixpermissions

Now the new Linux node type is available in EVE-NG. When you add the nodes, I recommend changing the RAM to 1024 MB and setting the number of NICs you need (I just put in 10, whether I’ll actually use that many or not). Change the QEMU custom options to -machine type=pc,accel=kvm -serial mon:stdio -nographic -boot order=c and the console type to telnet. Optionally, change the name and icon. You can make these changes permanent by creating a new node profile.

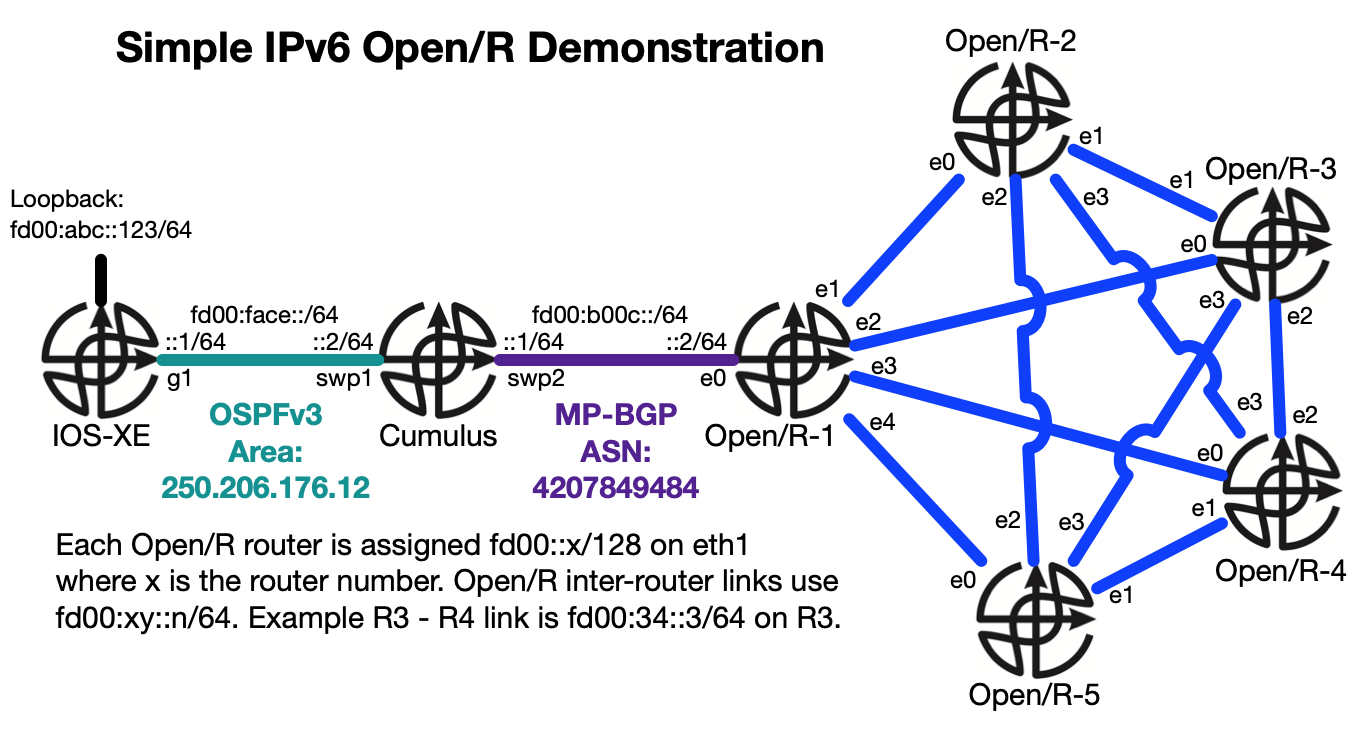

After adding the nodes and connecting them according to the topology above, I made some further changes to each of the Open/R routers. On each router, I changed the hostname and created a shell script that is called at startup to set the IP addresses on all of the interfaces. These are the changes I made to R1 (openr1); routers 2 through 5 have similar changes with the appropriate values:

sudo -s

hostname openr1

echo "openr1" > /etc/hostname

echo "127.0.0.1 openr1" >> /etc/hosts

echo "127.0.1.1 openr1" >> /etc/hosts

# Create the script /root/ip.sh containing the IP addresses

# for the router's interfaces:

#!/bin/bash

ip addr add fd00::1/128 dev eth1

ip addr add fd00:b00c::2/64 dev eth0

ip addr add fd00:12::1/64 dev eth1

ip addr add fd00:13::1/64 dev eth2

ip addr add fd00:14::1/64 dev eth3

ip addr add fd00:15::1/64 dev eth4

ip link set dev eth0 up

ip link set dev eth1 up

ip link set dev eth2 up

ip link set dev eth3 up

ip link set dev eth4 up

# Edit /etc/rc.local and add the IP script just before

# the Open/R line:

sh /root/ip.sh
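Routers 2 through 5 need the same kind of script with their own values. Judging from the addresses that appear in this lab (fd00:12::1 on R1’s link to R2, fd00:35::5 on R5’s link to R3), each link’s /64 is built from the two router numbers in ascending order, and each router uses its own number as the host part. The hypothetical gen_ip_sh function below generates an ip.sh under that inferred convention. The interface-to-peer mapping has to be supplied per router because it differs from node to node, and the scheme only works for single-digit router numbers:

```shell
# Hypothetical generator for /root/ip.sh (addressing convention inferred from this lab).
# Arguments: router number, then one spec per interface:
#   IFACE:PEER   router-to-router link, e.g. eth1:2
#   IFACE=ADDR   explicit address, e.g. eth0=fd00:b00c::2/64
gen_ip_sh() {
  local n="$1" spec iface addr peer pair
  shift
  echo '#!/bin/bash'
  # The router's advertised /128 lives on eth1, as in the R1 script.
  echo "ip addr add fd00::${n}/128 dev eth1"
  for spec in "$@"; do
    iface=${spec%%[:=]*}
    case $spec in
      *=*) addr=${spec#*=} ;;
      *)
        peer=${spec#*:}
        # Lower router number first, so the R3-R5 link becomes fd00:35::/64.
        if [ "$n" -lt "$peer" ]; then pair="${n}${peer}"; else pair="${peer}${n}"; fi
        addr="fd00:${pair}::${n}/64"
        ;;
    esac
    echo "ip addr add ${addr} dev ${iface}"
  done
  # Bring every listed interface up after addressing it.
  for spec in "$@"; do
    echo "ip link set dev ${spec%%[:=]*} up"
  done
}

# R1 (reproduces the script above):
#   gen_ip_sh 1 eth0=fd00:b00c::2/64 eth1:2 eth2:3 eth3:4 eth4:5 > /root/ip.sh
```

For example, openr5’s adjacency table later in this walkthrough shows eth0 facing R1, eth1 facing R4, eth2 facing R2 and eth3 facing R3, so its script would come from gen_ip_sh 5 eth0:1 eth1:4 eth2:2 eth3:3, which places fd00:35::5/64 on eth3.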

Next, we edit the /usr/sbin/run_openr.sh script one more time to personalize it further. We will modify the script to advertise the router’s /128 address on the eth1 interface and to run IPv6 only for this lab. I discovered that if IPv4 is enabled in the script, Open/R expects to form neighbor adjacencies over IPv4 addresses and does not seem to fall back to IPv6.

PREFIXES="fd00::1/128"

ENABLE_V4=false
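Since the only per-router difference in these two settings is the router number, this edit can be scripted too. A minimal sketch, assuming GNU sed, the fd00::N/128 numbering on every router, and that the PREFIXES and ENABLE_V4 assignments start at the beginning of a line (commented out or not); personalize_run_openr is a hypothetical name:

```shell
# Hypothetical helper: set PREFIXES to this router's /128 and disable IPv4.
# Arguments: router number, then the script to edit.
personalize_run_openr() {
  # "#?" also matches the PREFIXES line commented out earlier;
  # the "^" anchor keeps IFACE_PREFIXES untouched.
  sed -i -E -e "s|^#?PREFIXES=.*|PREFIXES=\"fd00::$1/128\"|" \
            -e 's/^#?ENABLE_V4=.*/ENABLE_V4=false/' "$2"
}

# Usage on openr3, as root (router number taken from the hostname):
#   personalize_run_openr "$(hostname | tr -dc '0-9')" /usr/sbin/run_openr.sh
```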

Now let’s power-cycle the VMs. Rebooting from inside the guest causes the VM to crash for some reason (once again, probably because the image was built on VirtualBox rather than QEMU). Instead, issue a shutdown now command, then power each node back on in EVE-NG. The five Open/R routers should now form IPv6 adjacencies over their directly connected links, and each should advertise its eth1 /128 address. We can finally get started exploring Open/R.

Working with Open/R

Open/R was designed for massive, distributed, meshed environments and is meant to be interacted with programmatically. However, you can poke around from a terminal window using the included breeze client. Typing “breeze” and pressing Enter lists the subcommands you can work with:

Commands:

config CLI tool to peek into Config Store module.

decision CLI tool to peek into Decision module.

fib CLI tool to peek into Fib module.

healthchecker CLI tool to peek into Health Checker module.

kvstore CLI tool to peek into KvStore module.

lm CLI tool to peek into Link Monitor module.

monitor CLI tool to peek into Monitor module.

perf CLI tool to view latest perf log of each…

prefixmgr CLI tool to peek into Prefix Manager module.

Let’s use the kvstore section to gather some information. Here you can see which interfaces are enabled for Open/R:

vagrant@openr5:~$ breeze kvstore interfaces

openr5's interfaces

Interface   Status   ifIndex   Addresses
----------- -------- --------- --------------------
eth0        UP       2         fe80::5200:ff:fe05:0
eth1        UP       3         fe80::5200:ff:fe05:1
eth2        UP       4         fe80::5200:ff:fe05:2
eth3        UP       5         fe80::5200:ff:fe05:3
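The addresses in this table are modified EUI-64 link-local addresses. Working backward from fe80::5200:ff:fe05:3, the MAC address of eth3 would be 50:00:00:05:00:03, so in this lab the last bytes of each address appear to encode the node number and the interface index (an inference from these outputs, not something stated by EVE-NG or Open/R here). The hypothetical helper below performs the forward conversion: it flips the universal/local bit of the first octet and inserts ff:fe in the middle of the MAC:

```shell
# Hypothetical helper: MAC address -> modified EUI-64 link-local IPv6 address.
# Leading zeros in each 16-bit group are dropped, but zero groups inside the
# interface ID are not compressed further (fine for MACs like these).
mac_to_linklocal() {
  local IFS=:
  set -- $1                        # split the MAC into its six octets
  local first=$(( 0x$1 ^ 0x02 ))   # flip the universal/local bit
  printf 'fe80::%x:%x:%x:%x\n' \
    $(( (first << 8) | 0x$2 )) \
    $(( (0x$3 << 8) | 0xff )) \
    $(( (0xfe << 8) | 0x$4 )) \
    $(( (0x$5 << 8) | 0x$6 ))
}

# mac_to_linklocal 50:00:00:05:00:03   prints fe80::5200:ff:fe05:3
```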

Verify neighbor adjacencies:

vagrant@openr5:~$ breeze kvstore adj

openr5's adjacencies, version: 765, Node Label: 38493, Overloaded?: False

Neighbor  Local Interface  Remote Interface  Metric  Weight  Adj Label  NextHop-v4  NextHop-v6            Uptime
openr1    eth0             eth4              7       1       50002      0.0.0.0     fe80::5200:ff:fe01:4  2h49m
openr2    eth2             eth2              6       1       50004      0.0.0.0     fe80::5200:ff:fe02:2  2h49m
openr3    eth3             eth3              5       1       50005      0.0.0.0     fe80::5200:ff:fe03:3  2h49m
openr4    eth1             eth1              6       1       50003      0.0.0.0     fe80::5200:ff:fe04:1  2h49m

You can see that Open/R uses link-local IPv6 addresses for adjacencies, just like OSPFv3 and EIGRPv6 do. You can get more detail on exactly how the neighbors are connected over TCP with breeze kvstore peers. Next, we’ll use the breeze fib commands to view the number of Open/R routes (counters), the best paths (list), and all available paths (routes):

vagrant@openr5:~$ breeze fib counters

== openr5's Fib counters ==

fibagent.num_of_routes : 4

vagrant@openr5:~$ breeze fib list

== openr5's FIB routes by client 786 ==

fd00::1/128 via fe80::5200:ff:fe01:4@eth0

fd00::2/128 via fe80::5200:ff:fe02:2@eth2

fd00::3/128 via fe80::5200:ff:fe03:3@eth3

fd00::4/128 via fe80::5200:ff:fe04:1@eth1

vagrant@openr5:~$ breeze fib routes

== Routes for openr5 ==

fd00::1/128

via fe80::5200:ff:fe01:4@eth0 metric 7

via fe80::5200:ff:fe02:2@eth2 metric 12

via fe80::5200:ff:fe03:3@eth3 metric 14

via fe80::5200:ff:fe04:1@eth1 metric 14

fd00::2/128

via fe80::5200:ff:fe01:4@eth0 metric 14

via fe80::5200:ff:fe02:2@eth2 metric 5

via fe80::5200:ff:fe03:3@eth3 metric 12

via fe80::5200:ff:fe04:1@eth1 metric 13

fd00::3/128

via fe80::5200:ff:fe01:4@eth0 metric 14

via fe80::5200:ff:fe02:2@eth2 metric 11

via fe80::5200:ff:fe03:3@eth3 metric 6

via fe80::5200:ff:fe04:1@eth1 metric 14

fd00::4/128

via fe80::5200:ff:fe01:4@eth0 metric 14

via fe80::5200:ff:fe02:2@eth2 metric 11

via fe80::5200:ff:fe03:3@eth3 metric 13

via fe80::5200:ff:fe04:1@eth1 metric 7
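In the output above, each route in breeze fib list is the lowest-metric next hop that breeze fib routes shows for the same prefix. As a rough illustration of that relationship, this hypothetical awk filter reduces fib routes output to one best path per prefix. It keeps the first next hop on a tie, whereas a router could install several equal-cost next hops:

```shell
# Hypothetical filter: read "breeze fib routes" output on stdin and print the
# lowest-metric next hop per prefix (the first one wins on a tie).
best_paths() {
  awk '
    # A line holding only a prefix (e.g. "fd00::1/128") starts a new block.
    NF == 1 && index($1, "/") > 0 { prefix = $1; order[++n] = prefix; next }
    # "via NEXTHOP@IFACE metric M": keep the next hop if M is the lowest so far.
    $1 == "via" && prefix != "" {
      m = $NF + 0
      if (!(prefix in best) || m < best[prefix]) { best[prefix] = m; hop[prefix] = $2 }
    }
    END {
      for (i = 1; i <= n; i++) {
        p = order[i]
        printf "%s via %s metric %d\n", p, hop[p], best[p]
      }
    }
  '
}

# Usage: breeze fib routes | best_paths
```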

Next we’ll examine prefixmgr. This subcommand is used to advertise, withdraw, and view the current status of what has been injected into the Open/R domain from this node:

vagrant@openr5:~$ breeze prefixmgr view

Type Prefix

-------- -----------

LOOPBACK fd00::5/128

This shows that openr5 is advertising fd00::5/128, which we seeded in the PREFIXES line of the /usr/sbin/run_openr.sh script. Let’s advertise the fd00:5::5/64 prefix:

vagrant@openr5:~$ breeze prefixmgr advertise fd00:5::5/64

Advertised 1 prefixes with type BREEZE

vagrant@openr5:~$ breeze prefixmgr view

Type Prefix

-------- ------------

BREEZE fd00:5::5/64

LOOPBACK fd00::5/128

To confirm that this view is local to each node, let’s go to R3 and run the same view command:

vagrant@openr3:~$ breeze prefixmgr view

Type Prefix

-------- -----------

LOOPBACK fd00::3/128

We see only R3’s locally advertised prefix. However, the new route advertised by R5 is present in R3’s routing table:

vagrant@openr3:~$ breeze fib list

== openr3's FIB routes by client 786 ==

fd00:5::5/64 via fe80::5200:ff:fe05:3@eth3

fd00::1/128 via fe80::5200:ff:fe01:2@eth0

fd00::2/128 via fe80::5200:ff:fe02:1@eth1

fd00::4/128 via fe80::5200:ff:fe04:2@eth2

fd00::5/128 via fe80::5200:ff:fe05:3@eth3

vagrant@openr3:~$ ping6 fd00::5

64 bytes from fd00::5: icmp_seq=1 ttl=64 time=0.345 ms

64 bytes from fd00::5: icmp_seq=2 ttl=64 time=0.467 ms

vagrant@openr3:~$ ping6 fd00:5::5

From fd00:35::5 icmp_seq=1 Destination unreachable: No route

From fd00:35::5 icmp_seq=2 Destination unreachable: No route

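As you would expect, the presence of a route doesn’t mean the address is actually reachable. R3 forwards the packet toward R5, but the error comes back from fd00:35::5 (R5’s address on the R3-R5 link) because R5 itself has no route to fd00:5::5. Let’s add the address to an interface on R5 (the interface is arbitrary as long as it is up):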

vagrant@openr5:~$ sudo ip addr add fd00:5::5/64 dev eth1

vagrant@openr3:~$ ping6 fd00:5::5

64 bytes from fd00:5::5: icmp_seq=1 ttl=64 time=0.688 ms

64 bytes from fd00:5::5: icmp_seq=2 ttl=64 time=1.00 ms

vagrant@openr2:~$ ping6 fd00:5::5

64 bytes from fd00:5::5: icmp_seq=1 ttl=64 time=1.29 ms

64 bytes from fd00:5::5: icmp_seq=2 ttl=64 time=0.470 ms

Metrics can be overridden on individual interfaces for traffic engineering. As the routing table below shows, the override changes the cost of paths that leave this router through that interface:

vagrant@openr5:~$ breeze lm set-link-metric eth3 99999

Are you sure to set override metric for interface eth3 ? [yn] y

Successfully set override metric for the interface.

vagrant@openr5:~$ breeze lm links

== Node Overload: NO ==

Interface   Status   Overloaded   Metric Override   ifIndex   Addresses
----------- -------- ------------ ----------------- --------- --------------------
eth0        Up                                      2         fe80::5200:ff:fe05:0
eth1        Up                                      3         fe80::5200:ff:fe05:1
eth2        Up                                      4         fe80::5200:ff:fe05:2
eth3        Up                    99999             5         fe80::5200:ff:fe05:3

vagrant@openr5:~$ breeze fib routes

== Routes for openr5 ==

fd00::1/128

via fe80::5200:ff:fe01:4@eth0 metric 8

via fe80::5200:ff:fe02:2@eth2 metric 14

via fe80::5200:ff:fe03:3@eth3 metric 100006

via fe80::5200:ff:fe04:1@eth1 metric 15

fd00::2/128

via fe80::5200:ff:fe01:4@eth0 metric 16

via fe80::5200:ff:fe02:2@eth2 metric 6

via fe80::5200:ff:fe03:3@eth3 metric 100005

via fe80::5200:ff:fe04:1@eth1 metric 13

fd00::3/128

via fe80::5200:ff:fe01:4@eth0 metric 16

via fe80::5200:ff:fe02:2@eth2 metric 12

via fe80::5200:ff:fe03:3@eth3 metric 99999

via fe80::5200:ff:fe04:1@eth1 metric 13

fd00::4/128

via fe80::5200:ff:fe01:4@eth0 metric 16

via fe80::5200:ff:fe02:2@eth2 metric 12

via fe80::5200:ff:fe03:3@eth3 metric 100005

via fe80::5200:ff:fe04:1@eth1 metric 7
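A quick check of these numbers suggests that the configured value replaces the measured metric of eth3 rather than being added to it. Writing m_eth3(P) for the metric of the path to prefix P via eth3, the one-hop path to R3’s own fd00::3/128 now costs exactly 99999, and longer paths cost 99999 plus the remainder of the path beyond R3 (the 7 and 6 below are inferred by subtraction from this output, not read directly). Before the change, the route to fd00::3/128 via eth3 had a metric of 6; had the override been added to that, it would read 100005 instead of 99999:

```latex
\begin{aligned}
m_{\text{eth3}}(\texttt{fd00::3/128}) &= 99999 \\
m_{\text{eth3}}(\texttt{fd00::1/128}) &= 99999 + 7 = 100006 \\
m_{\text{eth3}}(\texttt{fd00::2/128}) &= 99999 + 6 = 100005
\end{aligned}
```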

We can explore the metrics further by changing the network topology. I used the sudo ip link set dev eth# down command on the five routers to bring down all of the middle links, effectively creating a ring topology. R1 now neighbors only with R2 and R5, R2 only with R1 and R3, and so on. Now you can see that R3 has only two ways to reach R5’s advertised route:

vagrant@openr3:~$ breeze fib routes

== Routes for openr3 ==

fd00:5::5/64

via fe80::5200:ff:fe02:1@eth1 metric 23

via fe80::5200:ff:fe04:2@eth2 metric 15

In the above example, the path through R4 is preferred. Looking at the topology, you can see why: the path to R5 through R4 crosses two links, while the path through R2 crosses three, so its summed metric (15) is lower than that of the path through R2 (23). You could manually set the interface metric on R4’s eth1 interface (the one facing R5) to influence downstream path selection:

root@openr4:~# breeze lm set-link-metric eth1 20

vagrant@openr3:~$ breeze fib routes

== Routes for openr3 ==

fd00:5::5/64

via fe80::5200:ff:fe02:1@eth1 metric 22

via fe80::5200:ff:fe04:2@eth2 metric 26

R3 now prefers the path through R2. Open/R also offers set-link-overload and set-node-overload options to drain traffic from a link or an entire node, respectively. This is useful when you need to service a link or node and want traffic to shift gracefully onto other paths before you take it out of service.

By default, Open/R continuously recalculates link metrics and propagates them so that routing responds to changing conditions. This reflects Open/R’s roots as a routing protocol for meshed wireless networks, where RF conditions are constantly changing. Using EVE-NG, we can modify the link characteristics. On the R5 end of the link between R4 and R5, I set the delay to 1000 ms, jitter to 50 ms, and loss to 20%. R4’s routing table now prefers the route to R5 through R3, even though R4 is directly connected to R5:

root@openr4:~# breeze fib routes

== Routes for openr4 ==

fd00:5::5/64

via fe80::5200:ff:fe03:2@eth2 metric 27

via fe80::5200:ff:fe05:1@eth1 metric 10058
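The large metric on R4’s direct link comes from Open/R’s RTT-based link metrics. As an assumption based on our reading of Open/R’s link-monitor code from around this time (verify it against the version you run), the metric is the measured round-trip time in microseconds divided by 100, with a minimum of 1. That fits this lab: sub-millisecond RTTs give the single-digit metrics seen earlier, and roughly one second of RTT gives about 10,000, in line with the 10058 shown above. A hypothetical helper illustrating the arithmetic:

```shell
# Hypothetical helper: convert an RTT in microseconds to a link metric,
# ASSUMING metric = RTT_us / 100 (integer division) with a floor of 1.
rtt_to_metric() {
  local metric=$(( $1 / 100 ))
  if [ "$metric" -lt 1 ]; then
    metric=1
  fi
  echo "$metric"
}

# rtt_to_metric 700       prints 7      (0.7 ms, like the lab's unimpaired links)
# rtt_to_metric 1005800   prints 10058  (about one second of added delay)
```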

Integrating Open/R with traditional routing protocols

Now let’s turn our attention to integrating the Open/R domain with traditional routing protocols. Using FRRouting, we’ll configure an MP-BGP session over IPv6 between R1 and Cumulus. Likewise, we’ll configure OSPFv3 between Cumulus and a Cisco IOS-XE device that advertises a prefix on its loopback interface.

On both R1 and Cumulus, we need to enable FRRouting:

#Edit /etc/frr/daemons

zebra=yes

bgpd=yes

ospf6d=yes #(Cumulus only, not needed on R1)

sudo service frr start

sudo vtysh

You are now placed into FRRouting’s command-line interface. FRRouting uses an “industry standard” CLI that should look familiar to most network engineers. Here are the relevant configurations for R1, Cumulus, and IOS-XE:

R1:

router bgp 4207849484

bgp router-id 1.1.1.2

no bgp default ipv4-unicast

neighbor fd00:b00c::1 remote-as internal

!

address-family ipv6 unicast

redistribute kernel

redistribute connected

neighbor fd00:b00c::1 activate

neighbor fd00:b00c::1 next-hop-self

exit-address-family

Cumulus:

interface swp1

ipv6 address fd00:face::2/64

!

interface swp2

ipv6 address fd00:b00c::1/64

!

router bgp 4207849484

bgp router-id 1.1.1.1

no bgp default ipv4-unicast

neighbor fd00:b00c::2 remote-as internal

!

address-family ipv6 unicast

redistribute connected

redistribute ospf6

neighbor fd00:b00c::2 activate

neighbor fd00:b00c::2 next-hop-self

exit-address-family

!

router ospf6

ospf6 router-id 1.1.1.1

redistribute connected

redistribute bgp

interface swp1 area 250.206.176.12

IOS-XE:

ipv6 unicast-routing

!

interface Loopback0

no ip address

ipv6 address FD00:ABC::123/64

ipv6 enable

!

interface GigabitEthernet1

no ip address

ipv6 address FD00:FACE::1/64

ipv6 enable

ipv6 ospf 1 area 250.206.176.12

!

ipv6 router ospf 1

router-id 2.2.2.2

redistribute connected
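The OSPFv3 area ID 250.206.176.12 and the BGP ASN 4207849484 used in these configurations are the same 32-bit value, 0xFACEB00C, written in dotted-decimal and plain decimal form. (4207849484 also falls within the 32-bit private-use ASN range, 4200000000 to 4294967294, reserved by RFC 6996.) The hypothetical helpers below convert between the two notations with shell arithmetic:

```shell
# Hypothetical helpers: 32-bit integer <-> dotted-decimal notation.
int_to_dotted() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

dotted_to_int() {
  local IFS=.
  set -- $1   # split into four octets; write them without leading zeros (0xx reads as octal)
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# int_to_dotted 4207849484       prints 250.206.176.12
# dotted_to_int 250.206.176.12   prints 4207849484
# printf '0x%X\n' 4207849484     prints 0xFACEB00C
```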

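There’s one final piece of the puzzle to make this work. R1 is the gateway between the Open/R domain and the rest of the network: it learns the external prefixes through BGP, but nothing injects them into Open/R, so the other Open/R routers have no route to them. Because all traffic leaving the Open/R domain has to pass through R1 anyway, we can simplify things by advertising an Open/R default route from R1 that attracts all traffic for unknown destinations toward it: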

vagrant@openr1:~$ breeze prefixmgr advertise ::/0

Advertised 1 prefixes with type BREEZE

Now let’s test reachability to IOS-XE’s loopback interface from R3:

vagrant@openr3:~$ breeze fib routes

== Routes for openr3 ==

::/0

via fe80::5200:ff:fe02:1@eth1 metric 14

via fe80::5200:ff:fe04:2@eth2 metric 19

vagrant@openr3:~$ ping6 fd00:abc::123

64 bytes from fd00:abc::123: icmp_seq=1 ttl=61 time=2.71 ms

64 bytes from fd00:abc::123: icmp_seq=2 ttl=61 time=1.97 ms

vagrant@openr3:~$ traceroute6 fd00:abc::123

traceroute to fd00:abc::123 (fd00:abc::123) from fd00::3, 30 hops max, 24 byte packets

1 fd00::2 (fd00::2) 0.702 ms 0.31 ms 0.274 ms

2 fd00::1 (fd00::1) 0.746 ms 0.729 ms 0.686 ms

3 fd00:b00c::1 (fd00:b00c::1) 1.386 ms 0.828 ms 1.501 ms

4 fd00:face::1 (fd00:face::1) 1.5 ms 1.16 ms 1.282 ms

Here’s the IPv6 routing table on Cumulus:

cumulus# show ipv6 route

B>* fd00::1/128 [200/0] via fe80::5200:ff:fe01:0, swp2, 00:05:52

B>* fd00::2/128 [200/1024] via fe80::5200:ff:fe01:0, swp2, 00:05:52

B>* fd00::3/128 [200/1024] via fe80::5200:ff:fe01:0, swp2, 00:05:52

B>* fd00::4/128 [200/1024] via fe80::5200:ff:fe01:0, swp2, 00:05:52

B>* fd00::5/128 [200/1024] via fe80::5200:ff:fe01:0, swp2, 00:05:52

B>* fd00:12::/64 [200/0] via fe80::5200:ff:fe01:0, swp2, 00:05:52

B>* fd00:15::/64 [200/0] via fe80::5200:ff:fe01:0, swp2, 00:05:52

O>* fd00:abc::/64 [110/20] via fe80::a8bb:ccff:fe00:700, swp1, 00:20:35

C>* fd00:b00c::/64 is directly connected, swp2, 01:36:30

O fd00:face::/64 [110/1] is directly connected, swp1, 00:19:46

C>* fd00:face::/64 is directly connected, swp1, 01:36:50

C * fe80::/64 is directly connected, swp2, 01:36:30

C * fe80::/64 is directly connected, swp1, 01:36:49

C>* fe80::/64 is directly connected, eth0, 01:41:56

And finally, the IPv6 routing table on R1:

openr1# show ipv6 route

C>* fd00::1/128 is directly connected, eth1, 01:45:54

K>* fd00::2/128 [0/1024] via fe80::5200:ff:fe02:0, eth1, 01:45:54

K>* fd00::3/128 [0/1024] via fe80::5200:ff:fe02:0, eth1, 01:45:54

K>* fd00::4/128 [0/1024] via fe80::5200:ff:fe05:0, eth4, 01:45:54

K>* fd00::5/128 [0/1024] via fe80::5200:ff:fe05:0, eth4, 01:45:54

C>* fd00:12::/64 is directly connected, eth1, 01:45:54

C>* fd00:15::/64 is directly connected, eth4, 01:45:54

B>* fd00:abc::/64 [200/20] via fe80::5200:ff:fe06:2, eth0, 00:06:17

C>* fd00:b00c::/64 is directly connected, eth0, 01:45:54

B>* fd00:face::/64 [200/0] via fe80::5200:ff:fe06:2, eth0, 00:06:17

C * fe80::/64 is directly connected, eth4, 01:45:54

C * fe80::/64 is directly connected, eth1, 01:45:54

C>* fe80::/64 is directly connected, eth0, 01:45:54

Open/R’s applicability beyond Facebook

As we have seen, it is fairly straightforward to integrate Open/R into existing networks if you start with a working base (such as the one created by Akshat Sharma). Outside of Facebook, Open/R is still very much a developer-oriented project. While writing this article, I tried to compile it myself on a fresh Ubuntu 16.04 install, but kept running into compilation errors that I didn’t know how to resolve. As with any new technology, whether it gains popularity will depend on exposure as well as technical merit.

Open/R might never see meaningful use outside of Facebook’s hyperscale network, but I still think it is interesting that Facebook is working to overcome its specific networking challenges and move beyond traditional network protocols. People can make the argument “if it’s not broken, don’t fix it”, and that may very well apply to most network environments. When you run distributed systems of any kind at such a large scale, however, I find it exciting that new and potentially more creative approaches are being researched and developed, and that they could eventually apply across much of our industry.